John Tsiombikas nuclear@mutantstargoat.com

7 September 2014

Edit: I have since ported this test program to use LibOVR 0.4.4, and it works fine on both GNU/Linux and Windows.

Edit2: Updated the code to work with LibOVR 0.5.0.1, but unfortunately they removed the handy function which I was using to disable the obnoxious "health and safety warning". See the oculus developer guide on how to disable it system-wide instead.

I've been hacking with my new Oculus Rift DK2 on and off for the past couple of weeks now. I won't go into how awesome it is, or how VR is going to change the world, and save the universe or whatever; everybody who cares knows everything about it by now. I'll just share my experiences so far in programming the damn thing, and post a very simple OpenGL test program I wrote last week to try it out, which might serve as a baseline.

It's the early days of DK2 really, and it definitely shows. There’s a huge list of issues to be ironed out software-side by Oculus, and DK2 development is a bit of a pain in the ass at the moment.

First and foremost, there's no GNU/Linux support in the oculus driver/SDK (current version 0.4.2) yet. That's what we get for relying on proprietary crap, so there's no point in whining about it. We should bite the bullet and write a free software replacement or shut the fuck up really. OpenHMD looks like a good start, although it still only does rotation tracking, and doesn't utilize the IR camera to calculate the head position. Haven't tried it yet because I'm lazy, but using and improving it is the only future-proof way to go really. For now I'm stuck on my old nemesis: windows, due to the oculus sdk.

The second issue is that even on windows, the focus up to now has really been Direct3D 11 support. The oculus driver does a weird stunt to let programs output to the rift display without having it act as another monitor that shows part of the desktop and confuses users. That mode is called "direct-to-rift", and it really doesn't work at all for OpenGL programs at the moment. As I don't have any intention of hacking Direct3D code again in my life (I've had my fill in the past), I'm stuck in extended desktop mode, and I'm also missing a couple of features that are still D3D-only in the SDK. Hopefully this too will be fixed soon. Again, proprietary software problems, so not much can be done about it other than begging oculus to fix it in the next release.

There are other, relatively minor issues, like a really annoying and completely ridiculous health and safety warning that pops up every time an application initializes the oculus rift, but I'll not dwell on how stupid that is, since it thankfully can be disabled by calling a private internal SDK function (see example code at the end).

So anyway, onwards to the SDK and how to program for the rift. One huge improvement since the last time I did anything for the Oculus Rift DK1 with SDK 0.2.x is the new C API they've added. The old ad-hoc set of C++ classes was really a joke, and even though the new API isn't exactly what I'd call elegant, it's definitely much more usable.

With the old SDK, one would have to render each eye's view to a texture using the projection matrix and orientation quaternions supplied by the SDK, then draw both images onto the display itself, taking care to pre-distort them in order to counteract the pincushion effect produced by the rift's lenses. The distortion algorithm was specified in the documentation as a barrel distortion with specific radial scaling parameters, along with a sample HLSL shader program implementing it, but was otherwise left to the application.

The new SDK, while still able to work in a similar way (now dubbed "client distortion rendering" in the documentation), also provides a higher-level interface (SDK-based distortion) which, given the rendered texture(s), takes care of presenting them properly distorted and corrected on the rift's display. One obvious benefit of this approach is that future releases may improve the distortion and chromatic aberration correction algorithms without extra effort on the application side. Also, since the SDK now knows the time interval between requesting head-tracking information and final drawing to the HMD, it can do funky hacks to reduce apparent latency, and make the VR experience much smoother.

Here's an overview of the tasks required by the application to utilize the oculus rift through the Oculus SDK (0.4.2):

ovr_Initialize and ovrHmd_Create.WindowPos in ovrHmd structure),

and make it fullscreen there.ovrHmd_ConfigureTracking.ovrHmd_GetFovTextureSize.ovrGLTexture structure by filling it with information about

the framebuffer texture(s) we created and the area in them which will

contain each image. This will be passed to the ovrHmd_EndFrame function

during our drawing loop to let the SDK distort and present our renderings

to the user.ovrGLConfig

structure and passing it to ovrHmd_ConfigureRendering.ovrHmd_BeginFrame and bind the render target fbo.ovrMatrix4f_Projection and use it.ovrHmd_GetEyePose.

It returns a structure containing a translation vector and a rotation

quaternion, which we'll have to set/multiply with our view matrix.ovrHmd_EndFrame giving it a pointer to the ovrGLTexture

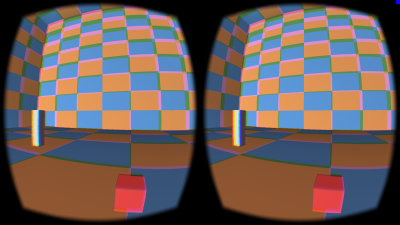

structures we filled in earlier describing our rendertarget texture(s).I wrote a very simple test program (see screenshot at the top of this article), demonstrating all of the above and released it as public domain. You may grab the code from my mercurial repository: http://nuclear.mutantstargoat.com/hg/oculus2. To compile it you'll need the Oculus SDK library, SDL2, and GLEW. The repo includes VS2013 project files to build on windows. As soon as the GNU/Linux version of the Oculus SDK is released, I'll port it and push an updated version.

In closing, I just have to mention a new project I started last week called libgoatvr. It's a VR abstraction library, which presents a simple unified API for VR programs, while supporting multiple runtime-switchable backends such as the oculus SDK and OpenHMD. I intend to base all my future VR projects on this library, so that I can switch back and forth between the various VR backends effortlessly, to try new features, avoid vendor lock-in, and stop relying solely on the whims of a proprietary SDK developer.

This was initially posted on my old wordpress blog. Visit the original version to see any comments.